15 new grantees to hold governments, employers and tech companies accountable for AI harms in Europe

The European AI & Society Fund has awarded €2 million to 15 bold, adventurous projects that explore how to hold accountable those who misuse AI and cause harm in Europe. Defending workers’ rights, holding data centre operators accountable for environmental and social damage, and tackling AI-enabled profiling that affects Black and migrant communities are just some of the projects that grantees will implement over the next year with funding from the AI Accountability grants.

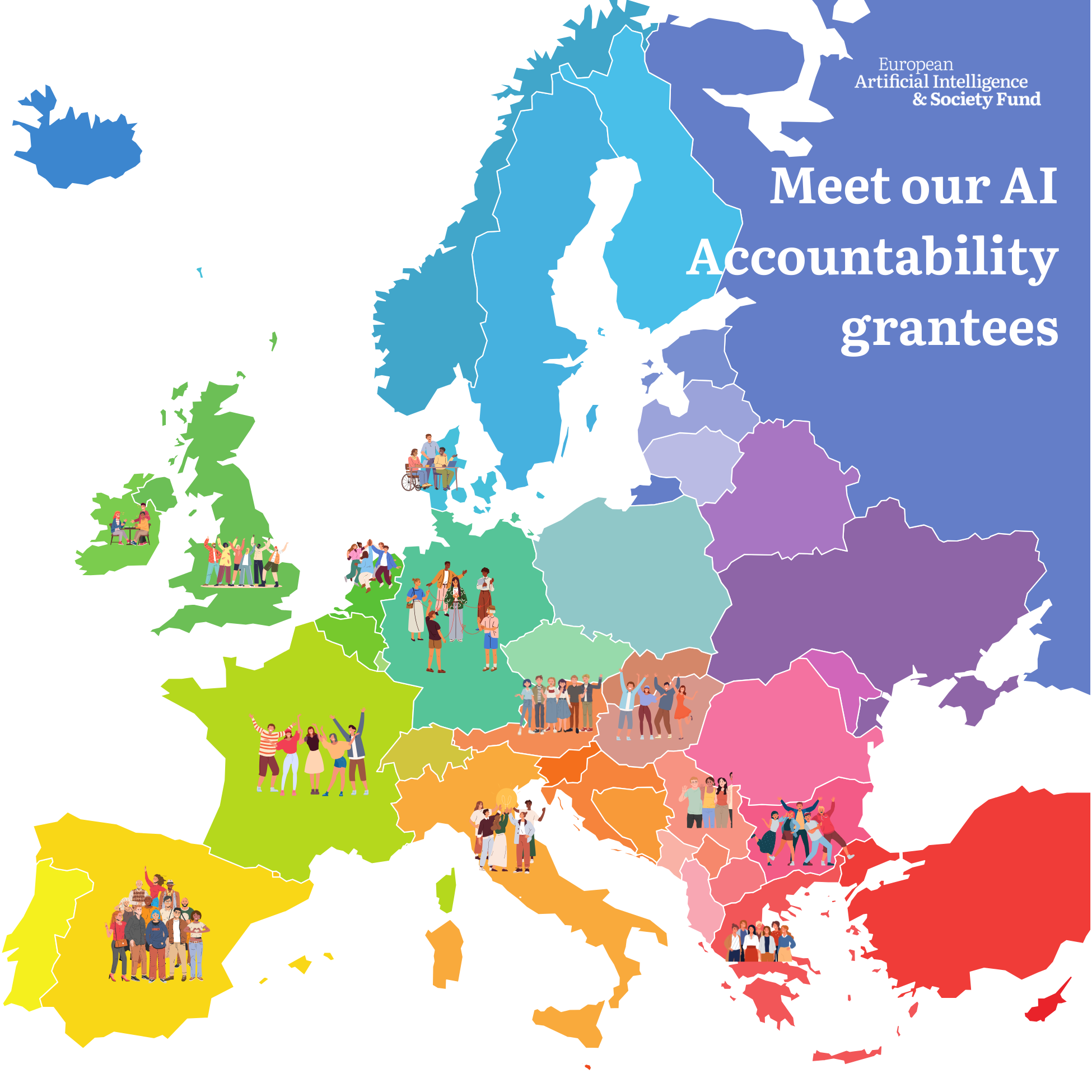

The 15 new projects, involving a total of 35 organisations, will focus their efforts on AI accountability in Austria, Bulgaria, Denmark, France, Germany, Greece, Hungary, Ireland, Italy, the Netherlands, Poland, Serbia, Slovenia, Spain, and the UK. As well as a grant of up to €200,000, grantees will receive capacity-building support and join a community of practice to learn about participatory community engagement, data requests, investigations, AI-harm reporting, and strategic litigation.

The AI Accountability grants are part of a €4 million “Making Regulation Work” Programme that aims to support civil society organisations to challenge AI harms, and hold governments, employers and tech companies accountable for the misuse of AI in Europe.

325 proposals were submitted from across Europe, with the majority coming from non-tech focused organisations. The AI Accountability Fund actively encouraged proposals from different sectors to foster cross-field collaboration. Find out more about the types of proposals we received.

Meet the AI Accountability Fund grantees:

AI Forensics, €160,000, Europe

AI Forensics is a European non-profit that audits influential and opaque algorithms to hold major tech platforms accountable. They conduct independent investigations, develop evidence collection tools, and inform policymakers with data-driven insights.

With this grant, they will use Article 40 of the Digital Services Act (DSA) to access platform data, assess AI systems, and learn how best to leverage this new transparency mechanism. They will share their insights with regulators and civil society, strengthening AI accountability.

Amnesty International, €160,000, Serbia/Western Balkans, Denmark, Netherlands

Amnesty International is a global movement of 10 million people committed to ensuring human rights for all. Amnesty Tech works to ensure that technology advances rights rather than eroding them.

They will build on the work of their Algorithmic Accountability Lab, which interrogates how AI systems used in welfare provision disproportionately affect marginalised communities and their rights, and will test accountability mechanisms. They will leverage the expertise and power of the Amnesty movement to carry out bold research, advocacy, strategic litigation, and communications.

Black Learning Achievement and Mental Health (BLAM UK), €159,999, UK

Black Learning Achievement and Mental Health UK (BLAM UK) is an award-winning charity dedicated to championing Black British culture, improving the wellbeing of people of African descent, and promoting a decolonised education system.

With this grant, they aim to protect Black communities from harm caused by AI-driven policing tools, like predictive policing and facial recognition. They will advocate for policies that ensure accountability and prevent injustice as AI technology evolves.

Border Violence Monitoring Network, €194,000, Serbia, Greece, Bulgaria, Europe

The Border Violence Monitoring Network is a network of organisations documenting pushbacks, violence and other rights violations at EU external borders. Given the substantial EU funding allocated to border surveillance technologies, including AI-driven tools, they will document the impact of this new techno-solutionist approach on people on the move. Based on evidence and research, they will contribute to the creation of accountability and redress mechanisms for those affected by harmful AI in border control.

The Bureau of Investigative Journalism, €133,296, Europe

The Bureau is an independent, non-profit newsroom that sparks change through impact-led journalism. They have a strong track record of collaborative, high-impact investigations into surveillance and workers’ rights.

This grant will support accountability for Big Tech’s role in AI-driven surveillance and repression that targets marginalised communities and at-risk citizens. By exposing wrongdoing by these companies and delivering the evidence needed to challenge it, they will empower stakeholders such as European legislators, regulators, litigation partners and civil society organisations to take action.

Friends of the Earth Ireland, €108,000, Ireland

Friends of the Earth Ireland is part of the world’s largest grassroots environmental network, campaigning for a just world free from pollution. This project aims to establish a system of accountability for AI-powered data centre growth in Ireland.

Despite introducing legally binding carbon budgets in 2021, the Irish Government has pursued an economic model heavily reliant on data centre expansion, largely driven by the rise of AI. Data centres have emerged as the largest driver of electricity demand in Ireland, consuming a share of all electricity three times higher than in other EU countries.

The Migrant Justice Community of Practice coalition, €120,000, Greece, Germany

The Migrant Justice Community of Practice (MJCoP) is a coalition of migrant-led organisations working to shift European migration policy toward community care and social provision. It focuses on building power amongst migrant and racialised-led justice organisations who are commonly under-funded and excluded from decision-making processes. The MJCoP is coordinated by the Greek Forum of Migrants, the International Women* Space and Equinox Initiative for Racial Justice. With this grant, they will develop strategies to address AI-based harms impacting migrant communities in Europe.

INTERET A AGIR, €200,000, France

INTERET A AGIR (IAA) is a collective of legal experts on a mission. IAA empowers civil society to use the law as a tool for positive change, defending fundamental rights and the public interest through strategic litigation.

IAA and its partners aim to highlight and reduce the systematic violation of AI workers' fundamental rights in today's globalised economy, using two legal frameworks: the General Data Protection Regulation (GDPR) and the corporate duty of vigilance.

K-Monitor Association - Danes Je Nov Dan - Watchdog Poland, €132,080, Hungary, Slovenia, Poland

Governments increasingly use AI for automated decision-making (ADM) to improve the quality and efficiency of public services. However, these technologies also carry serious risks, such as contributing to biased decisions, limiting privacy and privatising public services.

Civic tech and transparency initiatives Danes Je Nov Dan (SI), K-Monitor (HU), and Watchdog Poland will work on improving the accountability of government AI/ADM use and explore how to make freedom of information (FOI) laws and frameworks fit for the use of digital technologies by public administrations.

Lighthouse Reports, €160,000, Europe

Lighthouse Reports is an award-winning investigative newsroom known for its work on migration, surveillance and AI. Their team is drawn from multiple disciplines, including data science and visual forensics. Their collaborative investigations engage decision-makers, civil society, litigators and others in broad “communities of accountability”. Through this grant they aim to use their experience at the intersection of technology, surveillance and migration to do collaborative reporting with affected communities that reveals any disparate impacts of AI deployments.

Observatorio de Trabajo, Algoritmo y Sociedad, €132,757, Spain

Observatorio de Trabajo, Algoritmo y Sociedad (Work, Algorithm and Society Observatory) tracks how the platform economy and the algorithmic organisation of work transform labour, economies and politics. They seek to empower workers and democratise these new work environments, which often rely on biased algorithms and automated decision systems for hiring, monitoring and surveillance, and work management.

With this grant, they will help informal riders who deliver food via the Glovo platform to access their rights, in light of Spain’s “Rider Law”, which came into effect in 2021. They will investigate how Glovo bypasses Spanish regulations, exploits workers and blocks their social security rights.

Privacy Network – StraLi, €65,000, Italy

Privacy Network is an Italian organisation specialised in digital rights protection, working to ensure technology serves society while preserving fundamental rights. StraLi is an Italian NGO whose core activity is the protection of fundamental rights and freedoms through strategic litigation.

With this grant, they will carry out work that enhances transparency and accountability in the use of SARI (Automatic Image Recognition System) by law enforcement and judicial authorities in Italy.

Reversing.Works, €80,000, Italy, Spain, Germany, Austria

Reversing.Works use technical expertise and multidisciplinary knowledge to empower workers and protect their rights from automated decision-making, surveillance, and opaque technology. They've proven their strategy and skill with a four-year lawsuit that bridges the skills gaps between labour, privacy, and software security experts.

Now they want to continue bringing their technical insights to the labour field. With this project, they aim to lower the barrier to entry so that activists (unions, works councils, or workers themselves) can get the support they need to make their boss, the platform, respect workers' rights.

Think-tank for Action on Social Change (TASC), €59,450, Ireland

Think-tank for Action on Social Change (TASC), Ireland’s only independent think tank focused on social equality, conducts research, drives public debate, influences policy, and supports grassroots change. Drawing on its expertise in economic inclusion, democracy, climate justice, health, and technology, TASC will examine the socio-environmental challenges faced by West Dublin communities hosting data centres.

Through a participatory democracy model, TASC will empower communities to advocate for their interests and hold data centre operators accountable for their impact on “sacrifice zones”, where deprivation and high pollution intersect.