How ready are social and tech justice organisations to test and use Europe’s AI accountability regulation?

What we’ve learnt from our AI Accountability funding round.

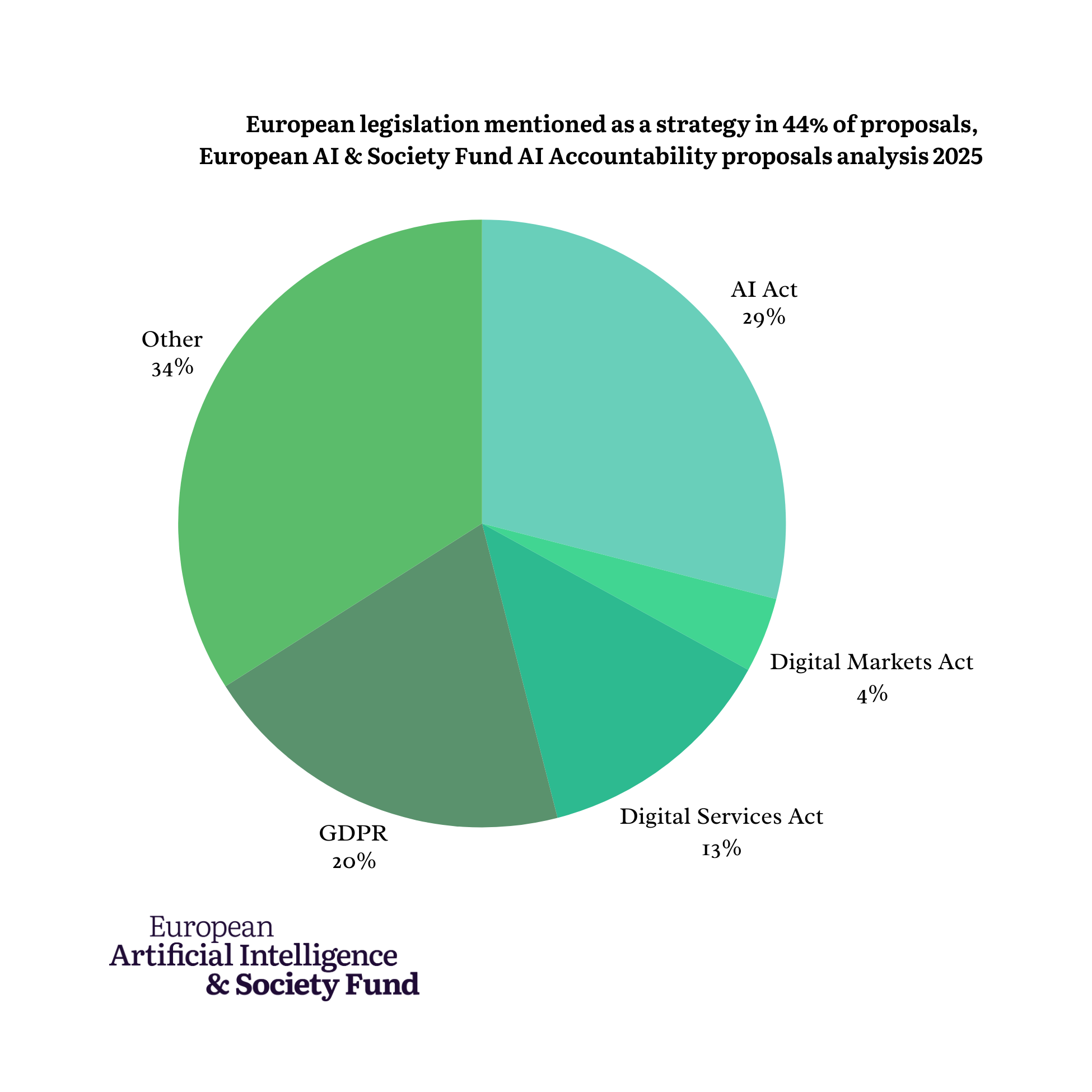

In the face of polluting data centres, harmful social media algorithms and discriminatory automated decision-making systems, the European Union has put in place a swathe of new regulations to rein in AI and its use (and abuse) by big tech, governments and employers: the Artificial Intelligence Act, the first attempt anywhere in the world to regulate AI comprehensively; the Digital Markets Act, aimed at addressing tech companies' market power; and the Digital Services Act, which regulates online platforms. Alongside Europe's existing equalities, rights, consumer and worker protection legislation, these and other initiatives provide a new set of tools to challenge harms caused by the use of AI, and they set precedents for responsible innovation that benefits people and society.

Public interest advocates, including European AI & Society Fund grantees, achieved significant victories during the development of these laws, such as the AI Act's prohibitions on the most harmful uses of AI. But given powerful corporate lobbying and the realpolitik of EU negotiations, the final legislation undoubtedly falls short in many respects. For instance, the AI Act allows companies to determine for themselves the degree of risk their products pose. Nonetheless, this legislation offers a new opportunity to bring a degree of accountability to AI technologies, but only if the rules are robustly implemented and enforced.

What we learned from our AI Accountability grants

That's why the European AI & Society Fund launched its €4 million Making Regulation Work programme to ensure new and existing rules deliver accountability in practice. In February, we awarded €400,000 across 10 AI Implementation grants to organisations working to ensure social justice is central to the implementation of the European AI Act.

We've also just announced the grantees for our AI Accountability funding round, distributing €2 million in grants to 15 bold, adventurous projects that explore how to hold accountable those who misuse AI and cause harm in Europe. Our open call attracted 325 proposals, a record for the Fund's grantmaking. Having reviewed, analysed and assessed all of them, it's clear that there is huge demand for funding for this work. But how ready are public interest groups to put Europe's rules and regulations to the test?

Here are four insights from the proposals we received to help inform future philanthropic activities:

1. There is huge demand for funding to hold AI accountable in Europe

A total of €46 million was requested across the 325 applications, 23 times the €2 million available in this round, which shows there is a huge appetite for funding and support to hold AI accountable.

Proposals came mostly from the UK, the Netherlands, Belgium and Germany, where relatively strong funding landscapes have nurtured public interest groups for some time. Applications from France increased significantly compared with our previous open calls, which could indicate an increasingly active civil society sector there. We were pleased to see a wealth of applications from the Balkans and Eastern Europe, possibly a ripple effect of the European AI & Society Fund's support for other groups in the region.

We actively encouraged organisations and coalitions from different sectors to apply together, with the intention of combining strengths and knowledge across the social justice and tech accountability fields. However, many project partnerships tended to be made up of similar organisations from similar sectors. Grantees will be invited to join a community of practice, which should help foster these cross-field connections.

2. Social justice organisations recognise how AI shapes the issues they work on

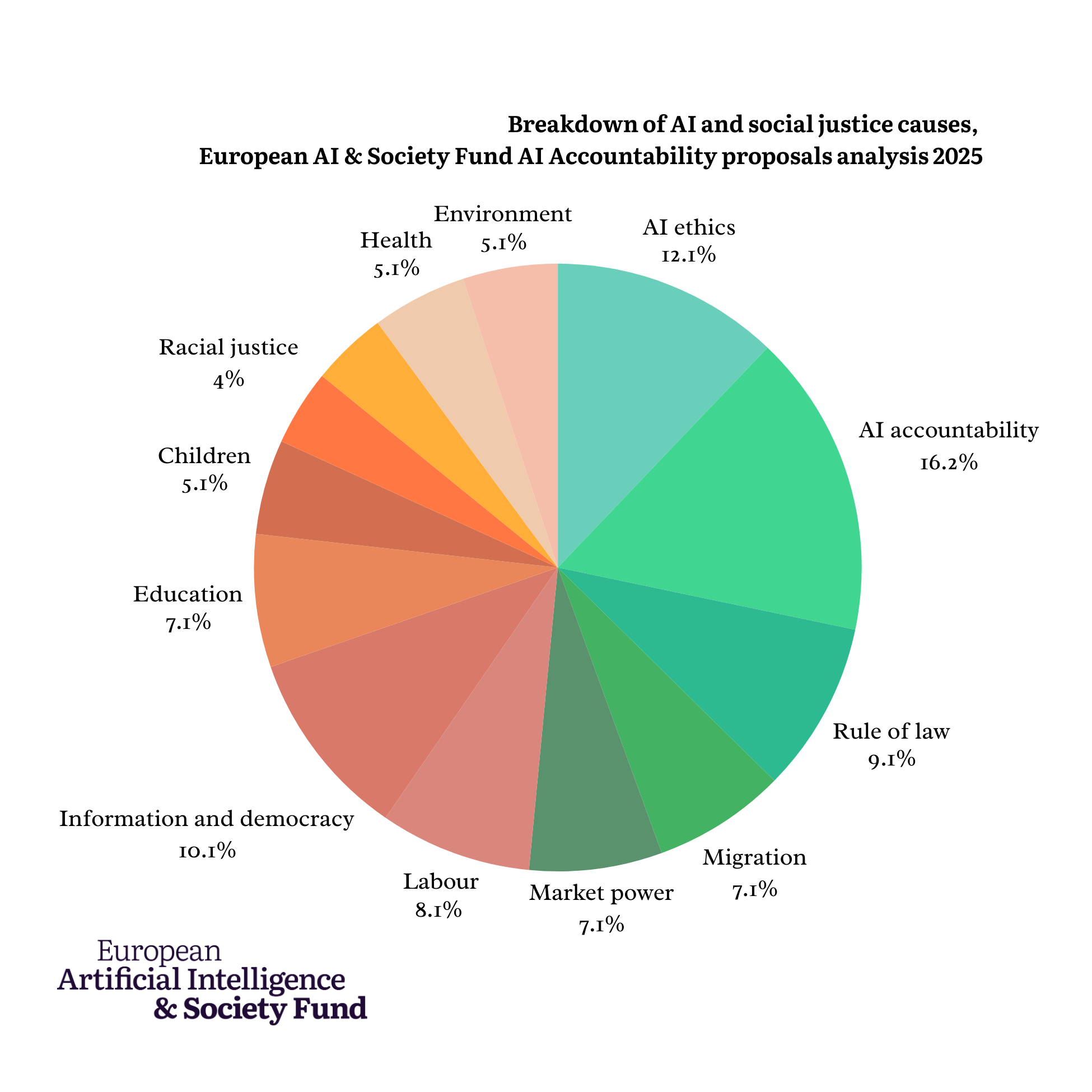

The majority of organisations that applied to the AI Accountability call for proposals were social justice organisations (74%), rather than organisations whose primary mission is to work on technology issues (26%). This is a positive development, indicating that the intersections between AI and other social justice issues are now clearly understood across civil society.

By offering an open call for proposals (rather than the 'invitation only' approach some funders use), we got a strong sense of the diversity of the field. Proposals ranged from children's charities wanting to fight back against social media algorithms that expose young people to harmful content, to campaigning organisations appalled by the environmental cost of AI.

Many applications were concerned about AI-enabled profiling of, and discrimination against, minoritised communities, including people with disabilities and migrants, as well as harmful uses of AI affecting healthcare systems, workers' rights and policing.

3. There is some uncertainty about how to test, apply and enforce AI regulation

Some of the European AI & Society Fund's existing grantees fought hard to secure safeguards for fundamental rights in EU regulation. However, much more work needs to be done to ensure new legal frameworks like the AI Act and Digital Services Act are stress-tested in practice.

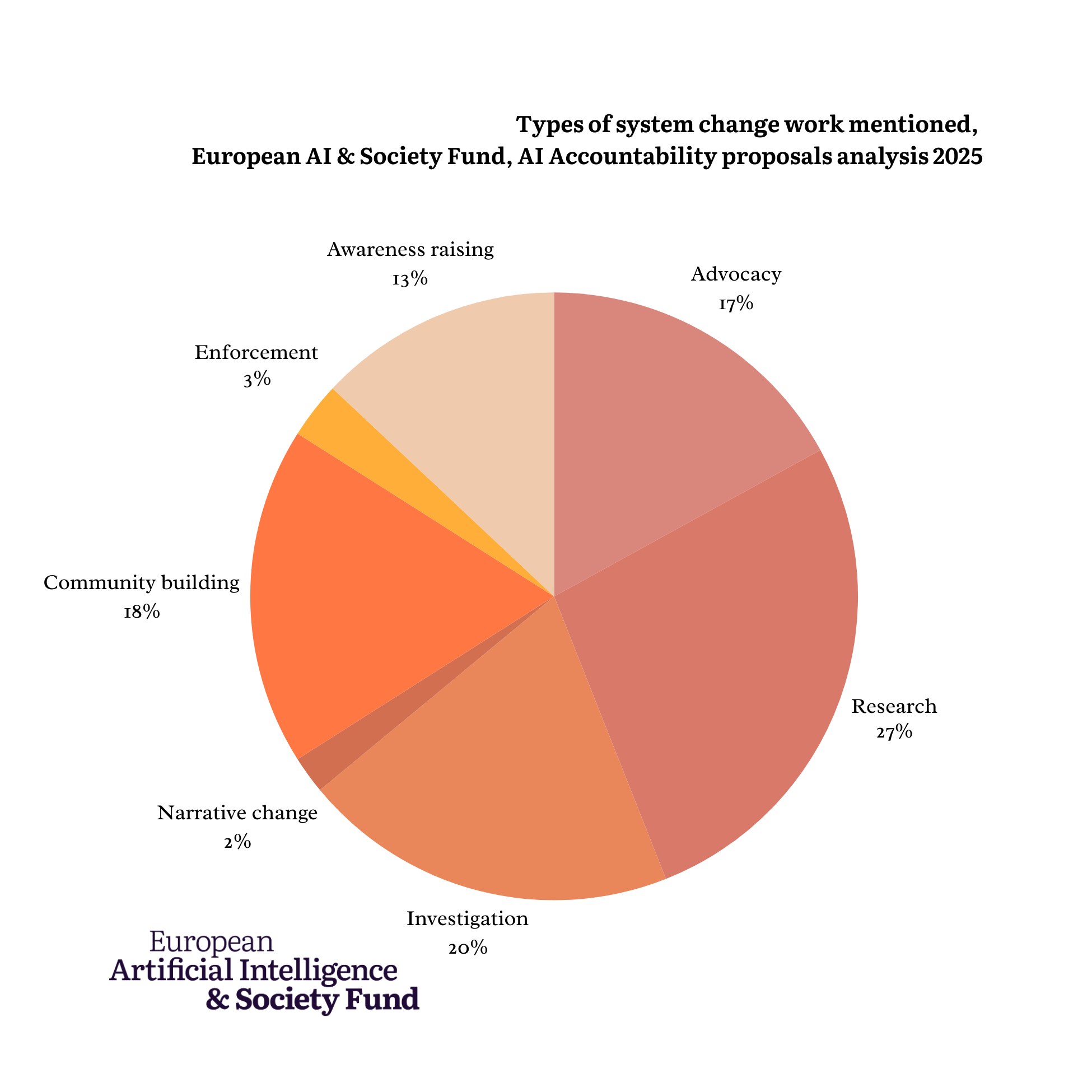

Instead, the majority of proposals focused on softer measures, such as building new AI ethics frameworks or raising awareness of AI and its harms. Only 44% of proposals to the AI Accountability open call mentioned a legal framework as part of their project. It's not clear whether this reflects unfamiliarity with such new legislation, or a need for greater legal and technical expertise in the field to identify the interventions most likely to achieve justice.

We also found that most proposals focused on harms caused by government abuse of AI, such as state surveillance, with few addressing the issues raised by corporate AI systems. Organisations seemed confident in identifying infringements of digital and civil rights by public authorities, but were less able to articulate the societal impacts of systems such as generative AI, or how to challenge them.

4. Few projects focus on challenging big tech’s dominance

Last year, the European AI & Society Fund selected eight Global AI & Market Power Fellowship teams to spearhead investigations into market power dynamics in the current AI ecosystem and to build a strong evidence base for designing interventions in the public interest. So we expected many applicants to our AI Accountability open call to propose challenging big tech's market dominance.

In fact, only 7% of proposals explored how to challenge big tech's dominance of the rapidly developing AI market. Yet new laws like the Digital Markets Act, and competition regulators like the UK's Competition and Markets Authority (CMA) or the European Commission's Directorate-General for Competition (DG Comp), could all offer routes to challenge big tech's dominance. Some of our existing grantees are already collaborating in this area, and later this year we plan to explore further ways to help public interest groups push back against corporate power.